The One-million-image Pose Estimation Dataset – First Part

This demonstration dataset is intended as a cornerstone for advancing autonomous satellite navigation. It was generated with DLVS3.

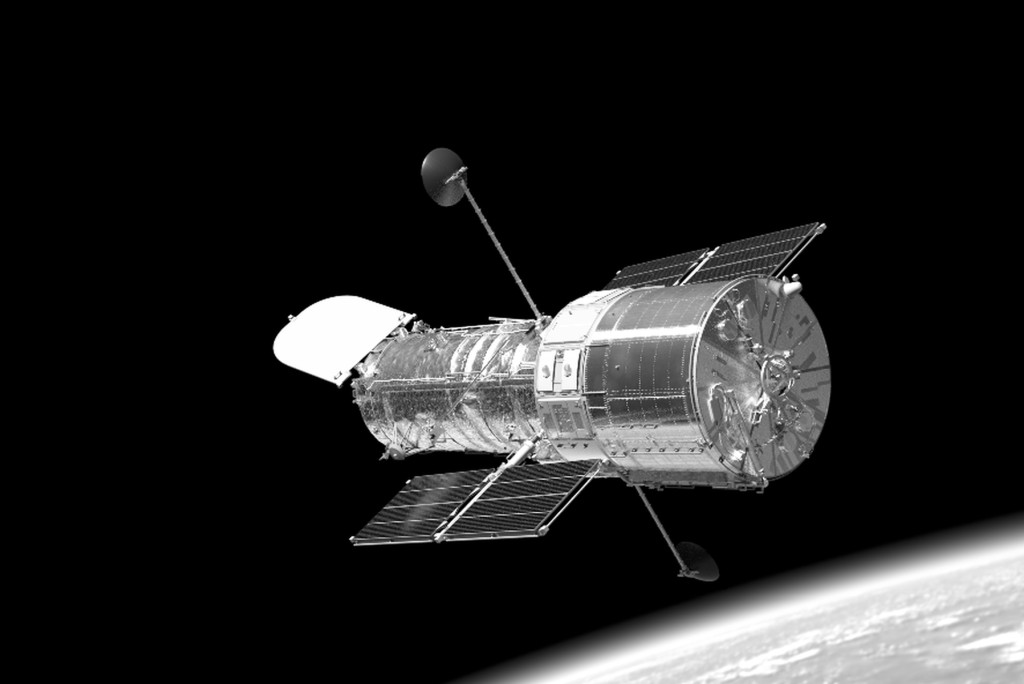

The Hubble Space Telescope (HST) was chosen because it is one of the best-documented and most-visited satellites in the history of spaceflight, with thousands of photos available for visual inspection. However, the photos taken during the STS missions were captured under very specific conditions in order to record as many details as possible. The relative positions of the Space Shuttle, the HST, the Earth and the Sun were not random, because the documentation aimed for homogeneous lighting. The Sun rarely illuminates the HST directly. Most of the time the telescope is shaded by the Shuttle, whose large white surfaces reflect the Earth's light over a wide angle.

An important warning: the chaser satellite is also part of the scene. Its illuminated surfaces can cast additional light onto the target satellite, and they can appear in reflections on the target's shiny surfaces.

The first part of the dataset contains 640,000 synthetic, floating-point HDR multichannel images in OpenEXR format. Each image has a resolution of 1024×1024 pixels. The following data channels are included for each sample:

- Color Image: This image presents the direct rendering of the Hubble Space Telescope in a 16-bit floating-point RGB format. It represents the raw output of the rendering process, without any post-processing effects such as glow or exposure adjustments. This ensures a physically accurate representation of the scene’s color information.

- CameraNormal: This image encodes the surface normal vectors of the Hubble Space Telescope as seen from the camera’s perspective. Stored in a 32-bit floating-point RGB format, each pixel’s RGB values represent the X, Y, and Z components of the normal vector at that surface point in camera space (where the camera’s origin is at 0, 0, 0). This data is crucial for understanding the object’s orientation relative to the viewer.

- Depth: This grayscale image represents the distance of each visible point on the Hubble Space Telescope from the camera in meters. The data is stored in a 32-bit floating-point format, where each pixel’s intensity value corresponds to the depth at that pixel. This channel offers direct information about the spatial layout of the scene along the viewing direction.

- FullMask: This is an 8-bit unsigned integer (uint8) grayscale mask image indicating the presence of the Hubble Space Telescope within the rendered view. Pixels with a non-zero value denote areas where the telescope is visible, while zero-valued pixels represent the background. This mask provides a basic segmentation of the foreground object.

- DetailedMask: This grayscale image provides a detailed semantic segmentation of the Hubble Space Telescope into its constituent parts. It is stored as 8-bit unsigned integers (uint8). Each pixel value corresponds to a specific component of the HST according to a predefined mapping.

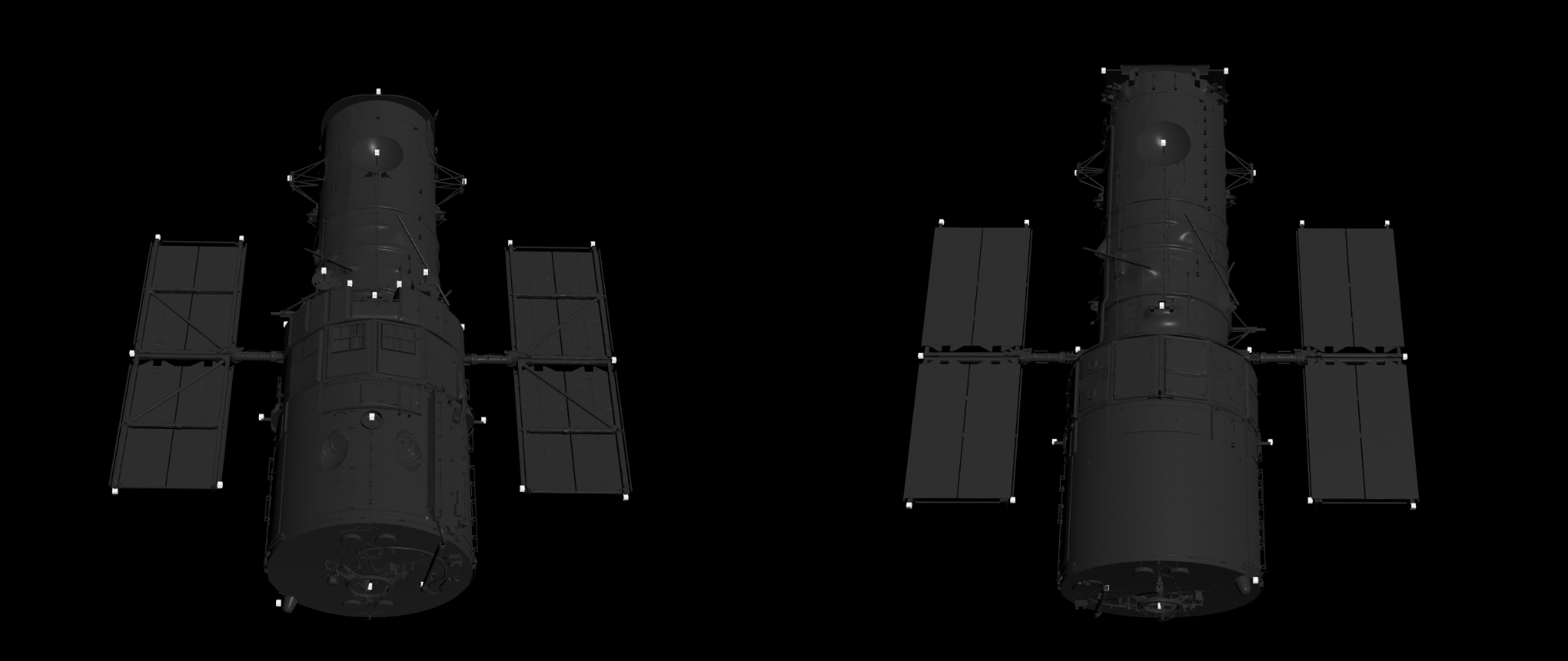
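Nonzero FullMask pixels mark the telescope, so a tight 2D bounding box can be derived directly from the mask. This is useful, for example, for cropping training images. The sketch below assumes the mask has already been loaded into a row-major 2D sequence of integers. The helper name `mask_bbox` is hypothetical.

```python
from typing import Optional, Sequence, Tuple


def mask_bbox(mask: Sequence[Sequence[int]]) -> Optional[Tuple[int, int, int, int]]:
    """Return the inclusive (x_min, y_min, x_max, y_max) of nonzero pixels, or None."""
    xs, ys = [], []
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value != 0:  # nonzero = telescope visible (FullMask convention)
                xs.append(x)
                ys.append(y)
    if not xs:
        return None  # the telescope is not visible in this view
    return min(xs), min(ys), max(xs), max(ys)


print(mask_bbox([[0, 0, 0], [0, 255, 255], [0, 0, 0]]))
```

For full 1024×1024 masks, a vectorized approach such as NumPy's `np.nonzero` would be considerably faster than this pure-Python loop.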

Keypoints

The 3D model of the Hubble Space Telescope used in this dataset is annotated with a set of 37 distinct keypoints. These keypoints are strategically positioned on significant features and extremities of the model. While the current count is fixed at 37, it’s important to note that the number and placement of these keypoints can be adjusted in future iterations or for specific application requirements.

The primary purpose of these keypoints is to provide a sparse yet informative representation of the Hubble’s 3D pose and spatial extent. By tracking the 2D projections of these 3D keypoints in the rendered images, it becomes possible to estimate the telescope’s orientation and position relative to the camera. The distribution of these 37 keypoints is designed to capture the overall structure and silhouette of the Hubble Space Telescope effectively, ensuring that the entire model is well-defined and delimited within the 3D space and its 2D projections.
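A minimal pinhole-camera sketch illustrates how 3D keypoints relate to their 2D projections. The intrinsics (`fx`, `fy`, `cx`, `cy`) are illustrative assumptions only. So is the axis convention (camera looking along +Z, x to the right, y downward). This section does not specify the dataset's actual camera model, and `project_points` is a hypothetical helper.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def project_points(points_cam: List[Point3], fx: float, fy: float,
                   cx: float, cy: float) -> List[Tuple[float, float]]:
    """Project camera-space 3D points to pixel coordinates with an ideal pinhole model.

    Assumed (hypothetical) convention: the camera looks along +Z, so visible points have Z > 0.
    """
    pixels = []
    for x, y, z in points_cam:
        if z <= 0:
            raise ValueError("point is behind the camera")
        pixels.append((fx * x / z + cx, fy * y / z + cy))
    return pixels


# Hypothetical intrinsics for a 1024x1024 image with the principal point at its center
print(project_points([(0.0, 0.0, 20.0), (2.0, -1.0, 20.0)], 1000.0, 1000.0, 512.0, 512.0))
```

Pose estimation works in the opposite direction. Given 2D-3D correspondences like these, a Perspective-n-Point solver such as OpenCV's `cv2.solvePnP` can estimate the rotation and translation.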

The OpenEXR (.exr) format

OpenEXR is a versatile and powerful image format. It is particularly well suited to storing high-dynamic-range (HDR) imagery and multiple image elements within a single file. A key advantage of the EXR format, as used by the DLVS3 studio rendering pipeline, is its ability to embed several image buffers, or “layers”, in one file. As a result, the .exr file for each rendered scene contains more than the final color image: it stores all of the image components listed above.

Furthermore, the EXR format offers significant flexibility in terms of data representation for each of these components. Unlike conventional image formats with fixed channel counts and data types, EXR allows each embedded layer to have an arbitrary number of channels (e.g., single-channel grayscale for depth, three channels for RGB or normals) and to be stored with different numerical precisions. In this dataset, you can expect to find data represented as:

- uint8: 8-bit unsigned integers (used for the FullMask and DetailedMask values; less common for primary data in HDR workflows).

- float16: 16-bit floating-point numbers (used for the color image, preserving its dynamic range).

- float32: 32-bit floating-point numbers (used for geometric data such as the normals and depth, for higher precision).

Depth map

Each EXR file contains a “depth” layer, which stores crucial spatial information about the rendered scene. It is important to understand how its pixel values relate to the actual 3D data, because they do not look like a meaningful grayscale image when viewed directly.

The depth layer stores floating-point pixel values. When such an image is displayed, a pixel value of 0.0 typically corresponds to black and a value of 1.0 to pure white. However, floating-point data can also hold values outside this normalized range:

- Values less than 0.0: These values will generally be interpreted as black.

- Values greater than 1.0: These values will generally be interpreted as white.
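The display convention above amounts to clipping to [0, 1] before quantizing. The minimal sketch below shows why a raw depth of 15 m displays as pure white. The function name `to_display_8bit` is hypothetical, and real viewers may also apply a gamma curve.

```python
def to_display_8bit(value: float) -> int:
    """Map a linear float pixel value to an 8-bit display value by clipping to [0, 1]."""
    clipped = min(max(value, 0.0), 1.0)  # values < 0 become black, values > 1 become white
    return round(clipped * 255)


for v in (-0.5, 0.0, 0.5, 1.0, 15.0):
    print(v, to_display_8bit(v))
```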

Regarding the Depth Image:

The Depth layer stores the distance of each visible point on the Hubble Space Telescope from the camera, in meters. In this dataset, the telescope is typically about 10 to 30 meters from the camera. These depth values are much larger than 1.0, so the entire telescope will appear completely white when the image is displayed directly with a 0.0-to-1.0 mapping. The depth information is encoded in the raw floating-point values, not in the perceived grayscale intensity. To interpret depth accurately, you need to read those raw values.
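To actually see the depth structure, the raw values can be remapped linearly to [0, 1] over the expected working range before display. This sketch assumes the 10 to 30 meter range mentioned above. This section does not say how background pixels are encoded in the Depth layer itself, so the sketch identifies them with the FullMask instead. `normalize_depth` is a hypothetical helper.

```python
from typing import List, Sequence


def normalize_depth(depth: Sequence[Sequence[float]], mask: Sequence[Sequence[int]],
                    near: float = 10.0, far: float = 30.0) -> List[List[float]]:
    """Linearly map depth in meters from [near, far] to [0, 1] for visualization.

    Background pixels (mask == 0) are set to 1.0 (white, i.e. "far").
    Depths outside [near, far] are clipped.
    """
    span = far - near
    out = []
    for depth_row, mask_row in zip(depth, mask):
        row = []
        for d, m in zip(depth_row, mask_row):
            if m == 0:
                row.append(1.0)  # background
            else:
                row.append(min(max((d - near) / span, 0.0), 1.0))
        out.append(row)
    return out


print(normalize_depth([[10.0, 20.0, 30.0, 40.0, 5.0]], [[1, 1, 1, 1, 0]]))
```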

Normal map

We use the “standard” normal map conventions:

Unit normal vectors corresponding to the u,v texture coordinates are mapped onto normal maps. Only vectors pointing towards the viewer occur (z from 0 to -1, for a left-handed orientation), because surfaces facing away from the viewer are never visible. The mapping is as follows:

- X: -1 to +1 : Red: 0 to 255

- Y: -1 to +1 : Green: 0 to 255

- Z: 0 to -1 : Blue: 128 to 255

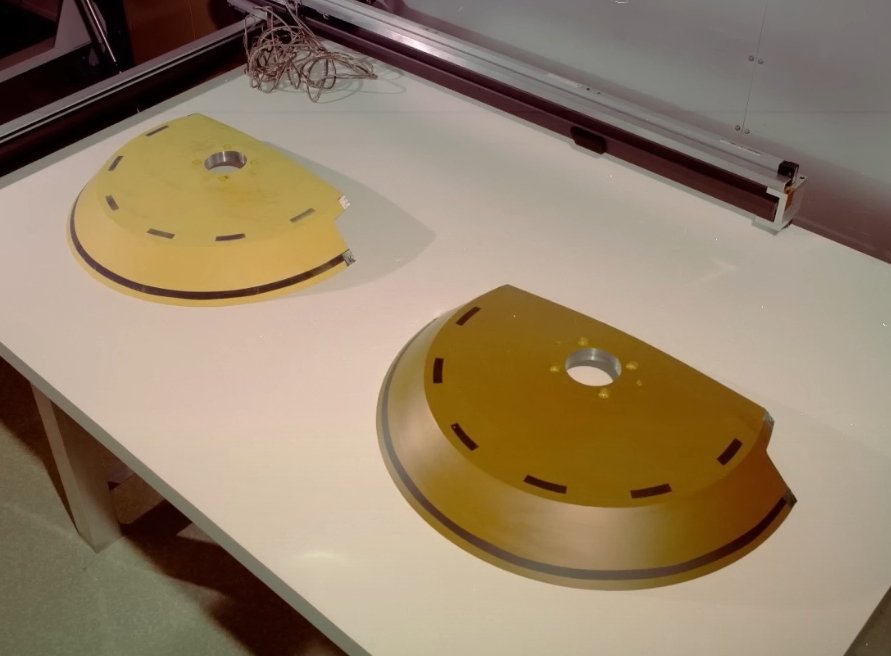
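Under the mapping listed above, a camera-space unit normal (for example, taken from the float CameraNormal layer) can be converted to an 8-bit RGB triple for visualization and back again. The sketch below is our reading of that table: R and G map [-1, +1] linearly to [0, 255], and B maps z in [0, -1] to [128, 255]. The function names are hypothetical. Decoded normals are only approximately unit length because of 8-bit quantization, so the decoder renormalizes them.

```python
import math
from typing import Tuple


def encode_normal(n: Tuple[float, float, float]) -> Tuple[int, int, int]:
    """Encode a unit normal facing the viewer (z in [-1, 0]) as 8-bit RGB."""
    x, y, z = n
    r = round((x + 1.0) * 127.5)  # -1 -> 0, +1 -> 255
    g = round((y + 1.0) * 127.5)  # -1 -> 0, +1 -> 255
    b = round((1.0 - z) * 127.5)  # 0 -> 128 (127.5 rounds to even 128), -1 -> 255
    return r, g, b


def decode_normal(rgb: Tuple[int, int, int]) -> Tuple[float, float, float]:
    """Invert encode_normal and renormalize to unit length."""
    r, g, b = rgb
    x = r / 127.5 - 1.0
    y = g / 127.5 - 1.0
    z = 1.0 - b / 127.5
    length = math.sqrt(x * x + y * y + z * z)
    return x / length, y / length, z / length


print(encode_normal((0.0, 0.0, -1.0)))  # a normal pointing straight at the viewer
```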

Randomized materials

The material properties of the Hubble Space Telescope model were subtly altered in every image. This randomization of material characteristics further increases the dataset's variability. This is extremely important because little or nothing is known about the condition of the materials after a 25-30 year period in orbit.

Similarly, the wrinkling of the multi-layer insulation (MLI) is unknown, so we procedurally rendered a different wrinkle state in each image. The reflectivity and roughness of the surfaces also change over time due to atomic oxygen erosion and micrometeoroid impacts.


The goal of randomization is to sample the space of plausible conditions around the unknown reality and thus obtain a more robust solution. Consequently, an arbitrary image selected from the dataset is not guaranteed to look completely realistic.

Background and light sources

For the sake of realism, it is extremely important to consider not only the Sun as the primary light source when rendering scenes, but also the light reflected from the Earth. Seen from low Earth orbit (LEO), the Earth is a surface spanning a large part of the sky, roughly 130 to 135 degrees in angular diameter at the HST's altitude. For this reason, the polished, smooth surfaces of the satellites also reflect its details: clouds, land masses, oceans and so on.
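The portion of the sky occupied by the Earth follows from simple geometry. Seen from altitude h above a sphere of radius R, the Earth's disk has a half-angle of asin(R / (R + h)). The spherical cap it covers is a fraction (1 - cos(half-angle)) / 2 of the full sphere. The sketch assumes a spherical Earth with a mean radius of 6371 km. The 540 km altitude in the example call is an illustrative, approximate value for the HST, not a value taken from the dataset metadata.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius, spherical-Earth assumption


def earth_disk(altitude_km: float):
    """Return (angular diameter in degrees, fraction of the full sky) covered by the Earth."""
    half_angle = math.asin(EARTH_RADIUS_KM / (EARTH_RADIUS_KM + altitude_km))
    diameter_deg = math.degrees(2.0 * half_angle)
    sky_fraction = (1.0 - math.cos(half_angle)) / 2.0  # cap solid angle / (4 * pi)
    return diameter_deg, sky_fraction


diameter, fraction = earth_disk(540.0)
print(f"angular diameter ~ {diameter:.0f} deg, sky fraction ~ {fraction:.0%}")
```

At 540 km this gives an angular diameter of about 134 degrees, covering roughly 31 percent of the full sky. The disk only approaches a full 180 degrees (half the sky) as the altitude goes to zero.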

To mimic perfect reflections, we created a 360-degree environment map for each rendered scene, storing the light energy arriving from every direction. For an example scene, this HDR environment was visualized from -17 EV to +5 EV in 1 EV steps.
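This sketch assumes that EV denotes an exposure offset applied to linear radiance as a power-of-two multiplier. Under that assumption, each 1 EV step doubles or halves the exposure. The sweep from -17 EV to +5 EV then corresponds to 23 frames with multipliers from 2^-17 to 2^5. The helper name is hypothetical.

```python
def ev_multipliers(ev_min: int, ev_max: int, step: int = 1):
    """Return (ev, multiplier) pairs, where multiplier = 2 ** ev scales linear radiance."""
    return [(ev, 2.0 ** ev) for ev in range(ev_min, ev_max + 1, step)]


sweep = ev_multipliers(-17, 5)
print(len(sweep), sweep[0], sweep[-1])
```

The 22-stop span illustrates how wide the dynamic range of the environment maps is. A single fixed exposure cannot display it in full.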


The color space of our EXR files is always linear. To obtain a final training image, additional post-processing steps are required:

- setting the exposure time

- possibly converting to another color space (for example, sRGB using a 2.2 gamma) or to grayscale.
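Both post-processing steps can be sketched for a single linear RGB pixel. First, multiply by 2^EV to set the exposure and clip the result. Then apply either a simple 2.2 gamma (an approximation of the piecewise sRGB curve) or a grayscale conversion. The Rec. 709 luminance weights used for grayscale are our assumption; the dataset description does not specify a formula. All function names are hypothetical.

```python
from typing import Tuple


def expose(rgb: Tuple[float, float, float], ev: float) -> Tuple[float, ...]:
    """Scale linear RGB by 2**ev (exposure) and clip to [0, 1]."""
    gain = 2.0 ** ev
    return tuple(min(max(c * gain, 0.0), 1.0) for c in rgb)


def to_gamma22_8bit(rgb: Tuple[float, ...]) -> Tuple[int, ...]:
    """Encode clipped linear values with a 2.2 gamma and quantize to 8 bits."""
    return tuple(round(255 * c ** (1 / 2.2)) for c in rgb)


def to_gray(rgb: Tuple[float, ...]) -> float:
    """Linear luminance using Rec. 709 weights (an assumption, not from the dataset)."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


pixel = (0.02, 0.05, 0.10)       # hypothetical linear HDR pixel
exposed = expose(pixel, ev=3.0)  # 8x brighter, then clipped
print(to_gamma22_8bit(exposed), to_gray(exposed))
```

Clipping happens after the exposure gain, so the same linear EXR data can yield many differently exposed training images.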

In addition, several augmentations are recommended:

- blooming,

- lens flare,

- light streaks,

- additional sensor noise, etc.

Examples of these are shown in a Jupyter notebook in the dataset's appendix.
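Of the augmentations listed above, additional sensor noise is the simplest to sketch. The example adds zero-mean Gaussian noise, a common stand-in for read noise, to display-range pixel values and then clips the result. The noise model, the `sigma` value and the helper name are assumptions, not the dataset's recommended settings. Blooming, lens flare and light streaks need image-space filtering and are not shown.

```python
import random
from typing import List, Optional


def add_gaussian_noise(pixels: List[float], sigma: float,
                       seed: Optional[int] = None) -> List[float]:
    """Add zero-mean Gaussian noise with standard deviation sigma, clipped to [0, 1]."""
    rng = random.Random(seed)  # seeded generator for reproducible augmentation
    return [min(max(p + rng.gauss(0.0, sigma), 0.0), 1.0) for p in pixels]


noisy = add_gaussian_noise([0.1, 0.5, 0.9], sigma=0.01, seed=42)
print(noisy)
```

Because of the clip to [0, 1], this should be applied after the exposure step rather than to the raw HDR values.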

Metadata

The positions in the dataset follow the true path of the Hubble Space Telescope, starting at 06:46:00 on 29/04/2025. We took a random time from each 5-minute interval and rendered it at 100 perturbed chaser and target positions. Each 1,000-image subset covers the daytime portion of one orbit, and the next subset continues from the following orbital dawn. The 640,000 images of this first part therefore span 640 orbits of the HST.
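The numbers above are consistent with each other. 100 perturbed renders per time sample and 1,000 images per orbit imply 10 time samples, or about 50 minutes of sampled daylight, per orbit. In addition, 640 orbits × 1,000 images gives 640,000 images. The description does not state the storage order. If the images are stored chronologically, with the 100 perturbations of one time sample stored consecutively (our assumption), a flat image index could be decoded as follows. The names are hypothetical.

```python
from typing import NamedTuple

IMAGES_PER_ORBIT = 1000
PERTURBATIONS_PER_SAMPLE = 100
SAMPLES_PER_ORBIT = IMAGES_PER_ORBIT // PERTURBATIONS_PER_SAMPLE  # 10 five-minute slots
SLOT_MINUTES = 5


class SampleId(NamedTuple):
    orbit: int         # 0..639 in the first part
    slot: int          # 5-minute interval within the orbit's daylight pass
    perturbation: int  # 0..99


def decode_index(index: int) -> SampleId:
    """Split a flat image index into (orbit, slot, perturbation) under the assumed ordering."""
    if not 0 <= index < 640 * IMAGES_PER_ORBIT:
        raise ValueError("index outside the first part of the dataset")
    orbit, within = divmod(index, IMAGES_PER_ORBIT)
    slot, perturbation = divmod(within, PERTURBATIONS_PER_SAMPLE)
    return SampleId(orbit, slot, perturbation)


print(decode_index(123_456))
print(SAMPLES_PER_ORBIT * SLOT_MINUTES, "minutes of daylight sampled per orbit")
```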

The following information is recorded:

- The position of the Sun

- The position of the Earth

- The position and rotation of the HST

- The position of the camera, the direction vector and the up vector of the viewport

- The exact time

(These data are given in the IAU_EARTH frame and in UTC. However, because of the z-buffer requirements of the renderer, the positions have been shifted so that the HST is at the coordinate origin (0, 0, 0).)

- The 3D coordinates of the 37 keypoints in camera world coordinates

- The 2D screen coordinates of the 37 keypoints

- The target's rotation quaternion and translation from the camera's point of view
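The target quaternion and translation allow keypoints to be mapped from the telescope's model frame into the camera frame as p_cam = R(q) · p_model + t. This sketch makes three assumptions: the quaternion ordering is (w, x, y, z), it follows the Hamilton convention, and it represents a model-to-camera rotation. Check these against the actual metadata before use. The function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # assumed (w, x, y, z) ordering


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q (Hamilton): v' = v + w*t + u x t, with t = 2 (u x v)."""
    w, x, y, z = q
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / norm, x / norm, y / norm, z / norm  # guard against slight drift
    vx, vy, vz = v
    # t = 2 * (u x v), where u = (x, y, z) is the vector part of q
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + u x t
    return (vx + w * tx + (y * tz - z * ty),
            vy + w * ty + (z * tx - x * tz),
            vz + w * tz + (x * ty - y * tx))


def model_to_camera(q: Quat, t: Vec3, p: Vec3) -> Vec3:
    """Apply the target pose to a model-frame point: p_cam = R(q) p_model + t."""
    rx, ry, rz = rotate(q, p)
    return rx + t[0], ry + t[1], rz + t[2]


# Identity rotation, target 20 m in front of the camera (hypothetical values)
print(model_to_camera((1.0, 0.0, 0.0, 0.0), (0.0, 0.0, 20.0), (1.0, 0.0, 0.0)))
```

Combined with a camera projection, this reproduces 2D keypoints from the 3D model. Comparing the result with the stored 2D screen coordinates is a simple way to confirm which conventions the metadata actually uses.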
